Goal

Install, test, and try out DataX. Everything in this post was done on CentOS 7 x64.

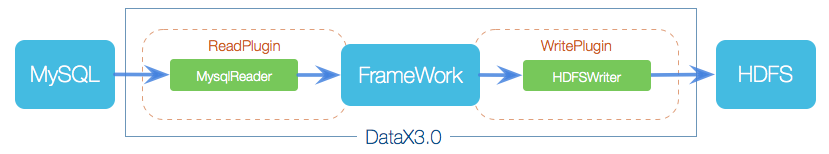

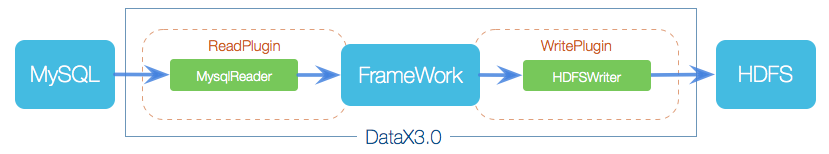

Framework design

Implementation

I. Environment setup

1. Python

CentOS 7 x64 comes with Python 2 preinstalled, so nothing extra is needed. Check the version with `python -V` (note the uppercase V).

Optionally, install pip:

```shell
yum -y install epel-release
yum install python-pip
```

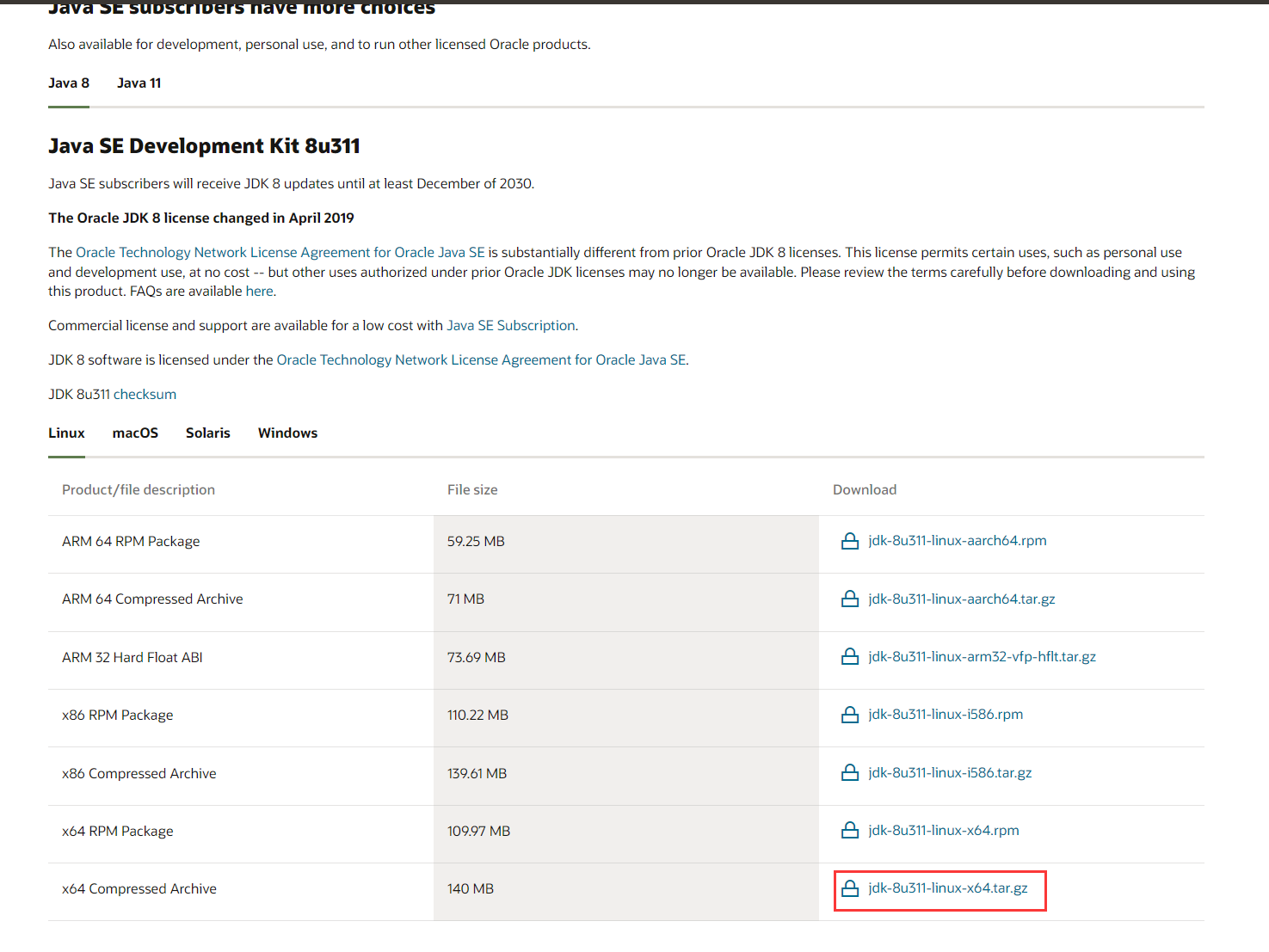

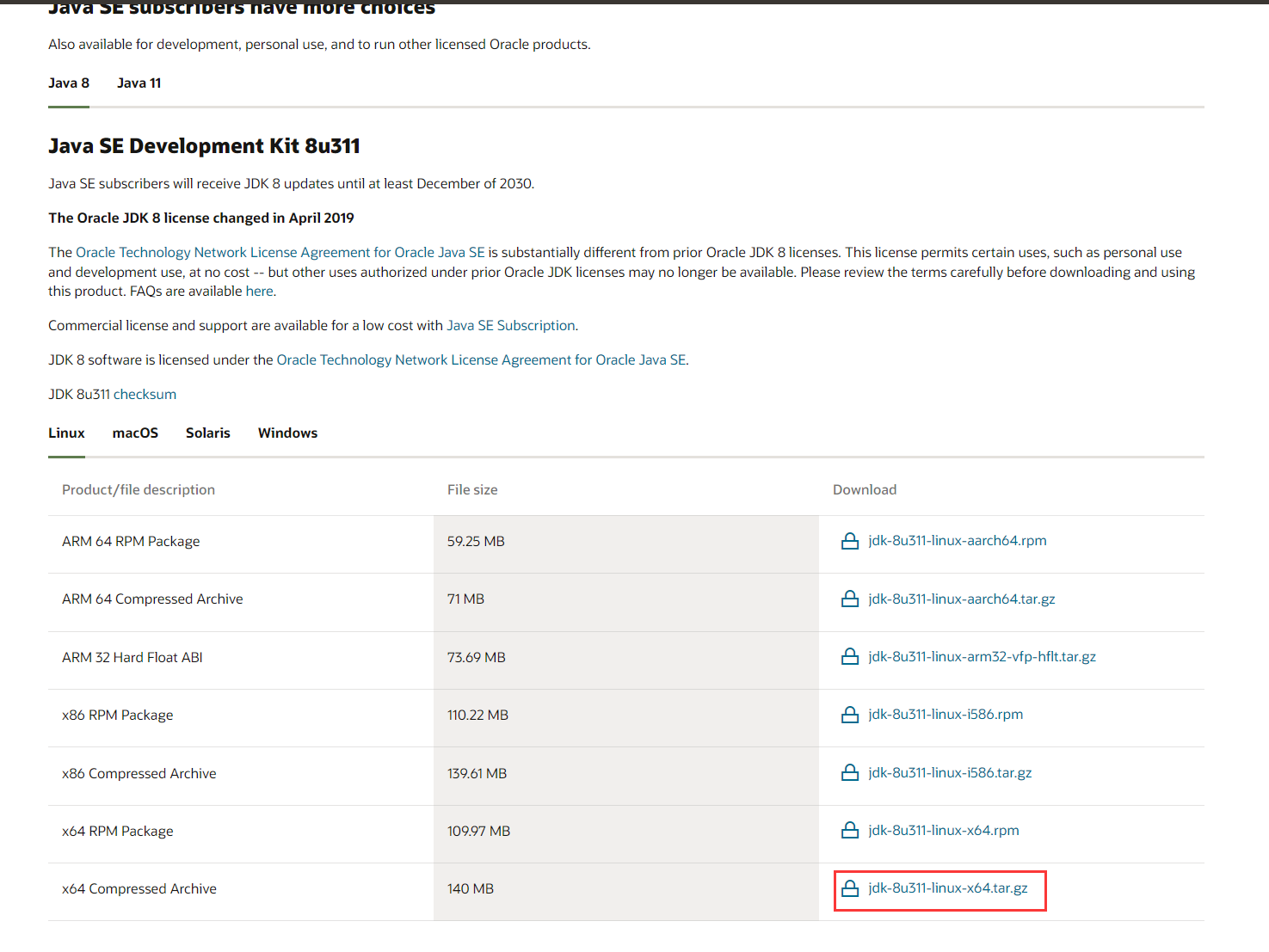

2. JDK1.8

2.1 Download jdk-8u311-linux-x64

Official download page (requires an Oracle account; the download may be slow):

https://www.oracle.com/java/technologies/downloads/

Baidu Netdisk mirror:

Link: https://pan.baidu.com/s/176N837BQXyUoIt7HvF0c0A

Extraction code: 0ld6

2.2 Install

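Upload the downloaded archive to `/export/server/` (create the directory first with `mkdir -p /export/server` if it does not exist), then extract it: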

```shell
cd /export/server/
tar -zxvf jdk-8u311-linux-x64.tar.gz
rm -f jdk-8u311-linux-x64.tar.gz # optional
```

Edit the environment variables:

```shell
yum -y install vim # skip if vim is already installed
vim /etc/profile
```

Append the following to the end of the file:

```shell
export JAVA_HOME=/export/server/jdk1.8.0_311
export PATH=$PATH:$JAVA_HOME/bin
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
```

Apply the changes:

Check the JDK version:

II. Install DataX

1. Download and install

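```shell
yum -y install wget # skip if wget is already installed
mkdir -p /export/software
cd /export/software/
wget http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz
tar -zxvf datax.tar.gz
rm -rf datax/plugin/*/._* # remove stray hidden files bundled in the plugin directories; without this the job fails at startup (newer releases may have fixed this, so check first)
rm -f datax.tar.gz # optional
```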

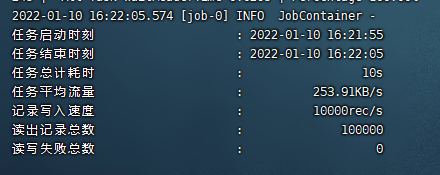

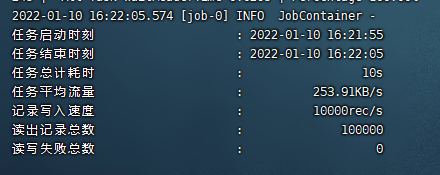

2. Test

```shell
cd /export/software/datax/
python bin/datax.py job/job.json
```

Result of the run:

III. Basic usage

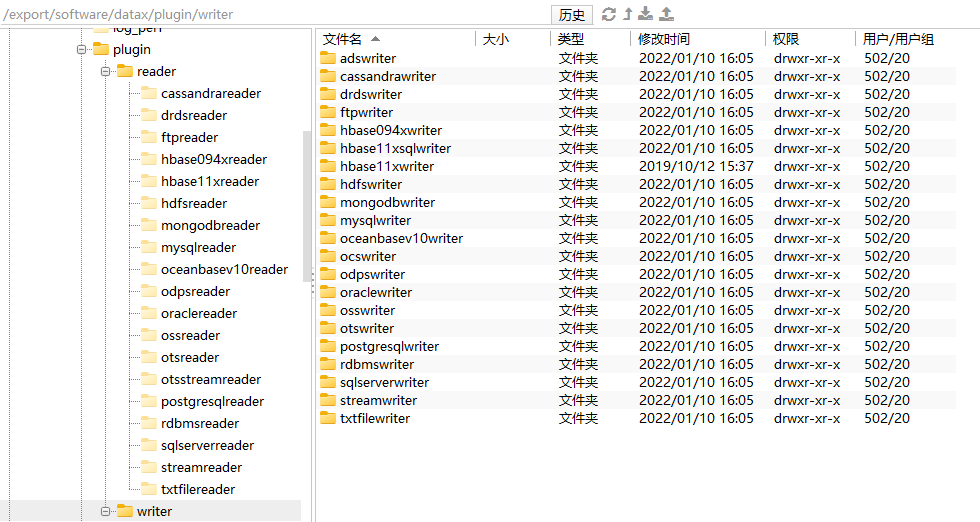

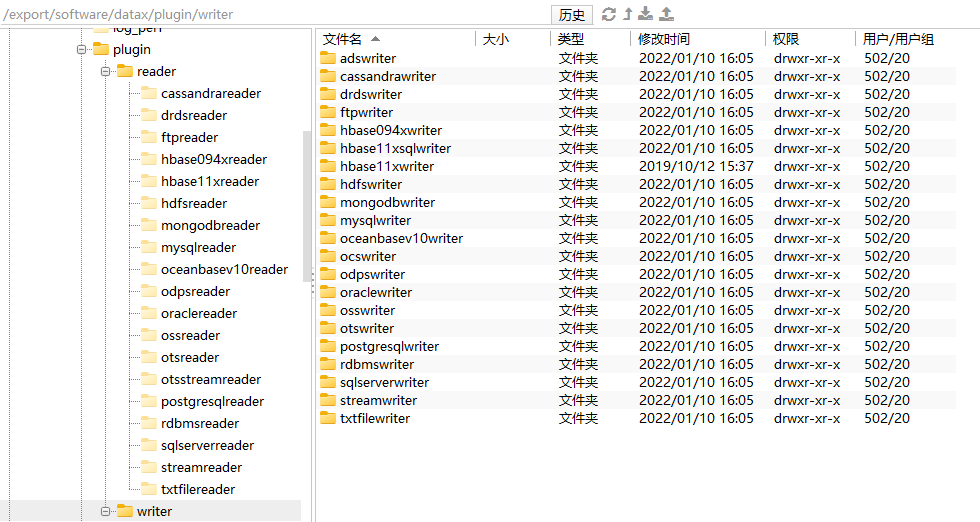

1. Supported data sources

The data sources supported by DataX are listed below (from the official documentation):

| Type | Data source | Reader | Writer | Docs |
|---|---|---|---|---|
| RDBMS (relational databases) | MySQL | √ | √ | Read, Write |
| | Oracle | √ | √ | Read, Write |
| | OceanBase | √ | √ | Read, Write |
| | SQLServer | √ | √ | Read, Write |
| | PostgreSQL | √ | √ | Read, Write |
| | DRDS | √ | √ | Read, Write |
| | Generic RDBMS (any relational database) | √ | √ | Read, Write |
| Alibaba Cloud data warehouse storage | ODPS | √ | √ | Read, Write |
| | ADS | | √ | Write |
| | OSS | √ | √ | Read, Write |
| | OCS | | √ | Write |
| NoSQL data storage | OTS | √ | √ | Read, Write |
| | Hbase0.94 | √ | √ | Read, Write |
| | Hbase1.1 | √ | √ | Read, Write |
| | Phoenix4.x | √ | √ | Read, Write |
| | Phoenix5.x | √ | √ | Read, Write |
| | MongoDB | √ | √ | Read, Write |
| | Hive | √ | √ | Read, Write |
| | Cassandra | √ | √ | Read, Write |
| Unstructured data storage | TxtFile | √ | √ | Read, Write |
| | FTP | √ | √ | Read, Write |
| | HDFS | √ | √ | Read, Write |
| | Elasticsearch | | √ | Write |
| Time-series databases | OpenTSDB | √ | | Read |
| | TSDB | √ | √ | Read, Write |

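For any other combination, write the job JSON by following the corresponding reader and writer docs. The plugin names can be found under `/export/software/datax/plugin`.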

2. Get a job template

For example, to move data from Oracle to HDFS, print a template with:

```shell
cd /export/software/datax/bin/
python datax.py -r oraclereader -w hdfswriter
```

This prints the following template:

```json
{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "oraclereader",
                    "parameter": {
                        "column": [],
                        "connection": [
                            {
                                "jdbcUrl": [],
                                "table": []
                            }
                        ],
                        "password": "",
                        "username": ""
                    }
                },
                "writer": {
                    "name": "hdfswriter",
                    "parameter": {
                        "column": [],
                        "compress": "",
                        "defaultFS": "",
                        "fieldDelimiter": "",
                        "fileName": "",
                        "fileType": "",
                        "path": "",
                        "writeMode": ""
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": ""
            }
        }
    }
}
```

3. Read from Oracle

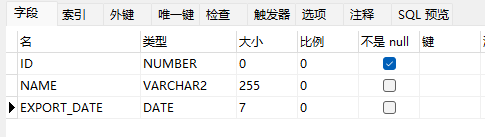

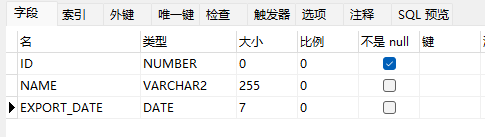

Create a test table as shown in the figure and insert a few rows.

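Following the Oracle reader docs, write the JSON below. On a first attempt it is easier to print the rows to the console with `streamwriter` and switch to writing HDFS only once the read works.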

```json
{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "oraclereader",
                    "parameter": {
                        "column": ["ID", "NAME", "EXPORT_DATE"],
                        "connection": [
                            {
                                "jdbcUrl": [
                                    "jdbc:oracle:thin:@localhost:1521:orcl"
                                ],
                                "table": [
                                    "test"
                                ]
                            }
                        ],
                        "password": "test",
                        "username": "test"
                    }
                },
                "writer": {
                    "name": "streamwriter",
                    "parameter": {
                        "print": true
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": 1
            }
        }
    }
}
```

Save the JSON above as `/export/software/datax/job/oracle2stream.json`, then run:

```shell
cd /export/software/datax/
python bin/datax.py job/oracle2stream.json
```

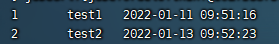

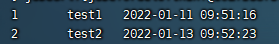

The rows inserted earlier are printed to the console.

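Extension: `table` can list several tables as long as they share the same structure; when writing to HDFS, this also produces multiple output files.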

4. Write to HDFS

Create a directory `/datax` in HDFS to receive the data, then extend the JSON above following the HDFS writer docs:

```json
{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "oraclereader",
                    "parameter": {
                        "column": ["ID", "NAME", "EXPORT_DATE"],
                        "connection": [
                            {
                                "jdbcUrl": [
                                    "jdbc:oracle:thin:@localhost:1521:orcl"
                                ],
                                "table": [
                                    "test"
                                ]
                            }
                        ],
                        "password": "test",
                        "username": "test"
                    }
                },
                "writer": {
                    "name": "hdfswriter",
                    "parameter": {
                        "defaultFS": "hdfs://localhost:9000",
                        "fileType": "text",
                        "path": "/datax",
                        "fileName": "test",
                        "column": [
                            {
                                "name": "ID",
                                "type": "INT"
                            },
                            {
                                "name": "NAME",
                                "type": "VARCHAR"
                            },
                            {
                                "name": "EXPORT_DATE",
                                "type": "TIMESTAMP"
                            }
                        ],
                        "writeMode": "append",
                        "fieldDelimiter": "\t"
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": 1
            }
        }
    }
}
```

Save the JSON above as `/export/software/datax/job/oracle2hdfs.json`, then run:

```shell
cd /export/software/datax/
python bin/datax.py job/oracle2hdfs.json
```

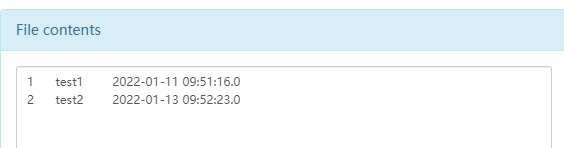

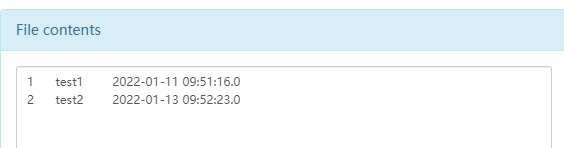

The rows inserted earlier now appear in HDFS.

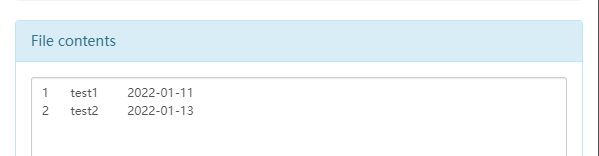

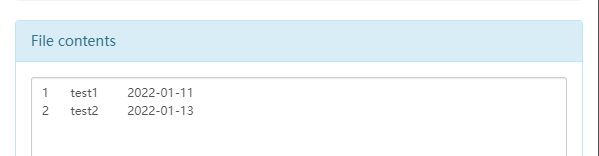

If the writer sets `type` to `date` instead of `TIMESTAMP` for `EXPORT_DATE`, the time of day (hours, minutes, seconds) is lost, as shown in the figure.

5. HDFS->Oracle

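Following the HDFS reader and Oracle writer docs, write the JSON below. Note that in the writer's `connection`, `jdbcUrl` is a single string, whereas the reader takes an array: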

```json
{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "hdfsreader",
                    "parameter": {
                        "path": "/datax/*",
                        "defaultFS": "hdfs://localhost:9000",
                        "fileType": "text",
                        "column": [
                            {
                                "index": 0,
                                "type": "long"
                            },
                            {
                                "index": 1,
                                "type": "string"
                            },
                            {
                                "index": 2,
                                "type": "date"
                            }
                        ],
                        "fieldDelimiter": "\t"
                    }
                },
                "writer": {
                    "name": "oraclewriter",
                    "parameter": {
                        "column": [
                            "ID",
                            "NAME",
                            "EXPORT_DATE"
                        ],
                        "connection": [
                            {
                                "jdbcUrl": "jdbc:oracle:thin:@localhost:1521:orcl",
                                "table": [
                                    "test"
                                ]
                            }
                        ],
                        "password": "test",
                        "username": "test"
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": 1
            }
        }
    }
}
```

Save the JSON above as `/export/software/datax/job/hdfs2oracle.json`, then run:

```shell
cd /export/software/datax/
python bin/datax.py job/hdfs2oracle.json
```